I was running this code in Jupyter Notebook and kept hitting small, annoying errors, so I spent a good part of today just fighting with it 😢

✅ Original code:

GitHub - Trusted-AI/adversarial-robustness-toolbox: Adversarial Robustness Toolbox (ART), a Python library for machine learning security (evasion, poisoning, extraction, inference; red and blue teams) (github.com)

Settings

target_name = 'toaster'

image_shape = (224, 224, 3)

clip_values = (0, 255)

nb_classes = 1000

batch_size = 16

scale_min = 0.4

scale_max = 1.0

rotation_max = 22.5

learning_rate = 5000.

max_iter = 500
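To get a feel for what scale_min, scale_max and rotation_max control, here is a small sketch that draws a random scale and rotation angle within those bounds. The uniform sampling and the `sample_patch_transform` helper are my own assumptions for illustration; ART's actual transform code may sample differently.

```python
import random

scale_min, scale_max, rotation_max = 0.4, 1.0, 22.5

def sample_patch_transform(rng: random.Random) -> tuple:
    """Hypothetical helper: draw one (scale, angle) pair within the settings.

    Assumes uniform sampling; this is not taken from ART's source.
    """
    scale = rng.uniform(scale_min, scale_max)          # patch size relative to the image
    angle = rng.uniform(-rotation_max, rotation_max)   # rotation in degrees
    return scale, angle

rng = random.Random(0)  # fixed seed so reruns give the same draws
for _ in range(3):
    scale, angle = sample_patch_transform(rng)
    print(f"scale={scale:.2f}, rotation={angle:+.1f} deg")
```

A wider scale and rotation range should make the patch work under more placements, at the cost of a harder optimization.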

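An image with the patch attached gets classified as whatever target_name specifies. I tried swapping toaster for a few other targets, and every one of them performed worse than toaster.

The available target_name values can be looked up at https://github.com/nottombrown/imagenet-stubs/blob/master/imagenet_stubs/imagenet_2012_labels.py !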
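To make the lookup concrete, here is a minimal sketch of what a name-to-index helper does. The three-entry table is a hand-copied excerpt for illustration (the indices are what I believe the ImageNet 2012 list uses for these classes), and `lookup_label` is a hypothetical stand-in, not the imagenet_stubs implementation, which may match names differently.

```python
# Illustrative excerpt of an ImageNet 2012 label table (assumed indices;
# the full 1000-entry list lives in imagenet_2012_labels.py).
LABEL_EXCERPT = {
    162: "beagle",
    859: "toaster",
    931: "bagel, beigel",
}

def lookup_label(name: str) -> int:
    """Hypothetical stand-in: return the first index whose
    comma-separated names include `name`."""
    for index, names in LABEL_EXCERPT.items():
        if name in [n.strip() for n in names.split(",")]:
            return index
    raise KeyError(f"unknown target name: {name!r}")

target_label = lookup_label("toaster")
print(target_label)
```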

Model Definition

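import numpy as np

import tensorflow as tf

from art.estimators.classification import TensorFlowV2Classifier

model = tf.keras.applications.resnet50.ResNet50(weights="imagenet")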

mean_b = 100

mean_g = 110

mean_r = 120

tfc = TensorFlowV2Classifier(model=model, loss_object=None, train_step=None, nb_classes=nb_classes,

input_shape=image_shape, clip_values=clip_values,

preprocessing=([mean_b, mean_g, mean_r], np.array([1.0, 1.0, 1.0])))

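This sets up the classifier along with the per-channel color values (B, G, R). They are passed in as the mean part of the preprocessing tuple, with 1.0 as the standard deviation for each channel.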
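As a rough illustration of what a `preprocessing=(mean, std)` tuple does, the sketch below subtracts the per-channel means and divides by the per-channel standard deviations in plain NumPy. This reflects my understanding of ART's standardization step and is not ART's own code; `standardize` and the dummy image are made up for the example.

```python
import numpy as np

mean = np.array([100.0, 110.0, 120.0])  # mean_b, mean_g, mean_r from the post
std = np.array([1.0, 1.0, 1.0])

def standardize(x: np.ndarray) -> np.ndarray:
    """Hypothetical helper: (x - mean) / std, broadcast over the channel axis."""
    return (x - mean) / std

# Dummy batch of one 224x224 image where every pixel value is 128.
dummy = np.full((1, 224, 224, 3), 128.0)
out = standardize(dummy)
print(out.shape)
print(out[0, 0, 0])
```

With a standard deviation of 1.0 the division changes nothing, so only the mean subtraction has an effect.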

Adversarial Patch Generation

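from art.attacks.evasion import AdversarialPatch

from imagenet_stubs.imagenet_2012_labels import name_to_label

ap = AdversarialPatch(classifier=tfc, rotation_max=rotation_max, scale_min=scale_min, scale_max=scale_max,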

learning_rate=learning_rate, max_iter=max_iter, batch_size=batch_size,

patch_shape=(224, 224, 3))

label = name_to_label(target_name)

y_one_hot = np.zeros(nb_classes)

y_one_hot[label] = 1.0

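# Tile y_one_hot images.shape[0] times along the rows and once along the columns,

# so y_target has shape (images.shape[0], nb_classes) and every row is a copy of y_one_hot.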

y_target = np.tile(y_one_hot, (images.shape[0], 1))

patch, patch_mask = ap.generate(x=images, y=y_target)

AdversarialPatch(~), defined in art/attacks/evasion, takes care of generating the patch for you. patch_shape=(224, 224, 3) means it generates a 224x224 color patch.
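The one-hot target built in the code above can be checked in isolation. This sketch uses a made-up batch size of 4 in place of `images.shape[0]` and 859 as an assumed index for toaster; only the shapes matter here.

```python
import numpy as np

nb_classes = 1000
label = 859    # assumed index for "toaster"
n_images = 4   # stand-in for images.shape[0]

# One-hot vector: 1.0 at the target class, 0.0 everywhere else.
y_one_hot = np.zeros(nb_classes)
y_one_hot[label] = 1.0

# Repeat the vector once per image so each image gets the same target.
y_target = np.tile(y_one_hot, (n_images, 1))
print(y_target.shape)
print(y_target.argmax(axis=1))
```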

If you look closely at the generated patch, you can make out the shape of a toaster in it.

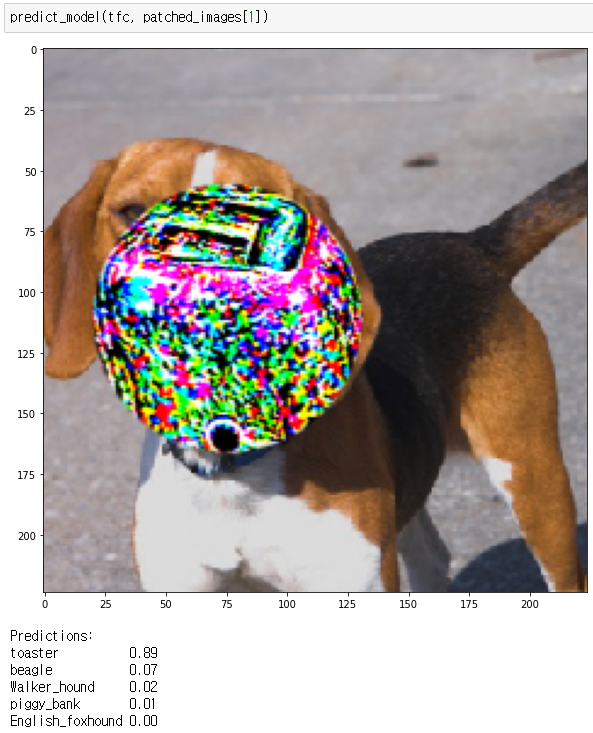

Evaluations

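It is very obvious that something foreign has been added, but once the patch is attached to an image, the model classifies it as the intended target, toaster.

(The ground-truth labels are bagel and beagle.)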
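Whether the patched images land on the target class can be checked with an argmax over the model outputs. The prediction array below is fabricated toy data with 5 classes rather than real ResNet50 output, and `targeted_success_rate` is a hypothetical helper.

```python
import numpy as np

def targeted_success_rate(predictions: np.ndarray, target_label: int) -> float:
    """Fraction of samples whose top-1 class equals the target label."""
    top1 = predictions.argmax(axis=1)
    return float((top1 == target_label).mean())

# Toy scores for 3 patched images over 5 classes (not real model output).
toy_predictions = np.array([
    [0.05, 0.05, 0.80, 0.05, 0.05],
    [0.10, 0.60, 0.20, 0.05, 0.05],
    [0.02, 0.03, 0.90, 0.03, 0.02],
])

rate = targeted_success_rate(toy_predictions, target_label=2)
print(f"targeted success rate: {rate:.2f}")
```

In the notebook, the predictions would come from something like `tfc.predict(ap.apply_patch(images, scale=0.5))`, where the scale value is just an example.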
