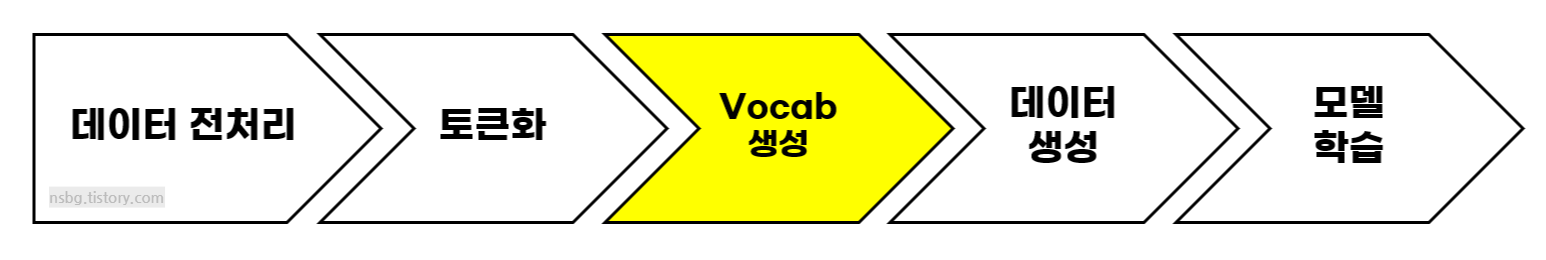

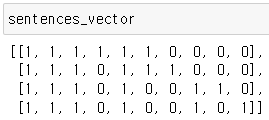

Concept

Word embedding represents each word in the vocabulary (vocab) as a dense vector of real numbers.

Methods (※ based on PyTorch)

1) Create an embedding layer: use nn.Embedding
2) Pre-trained word embedding: load and use pre-trained word embeddings (Word2Vec, GloVe, etc.)

Example

1) Creating an embedding layer

① Implementing it directly, without an nn.Embedding layer (the cells run in order)

    import torch

    train_data = 'I want to be a AI engineer'

    # Build the vocabulary (duplicates removed)
    word_set = set(train_data.split())

    # Assign a unique integer to each word
    vocab =..
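The excerpt is cut off at the vocabulary step, so what follows is not the author's remaining code. It is a minimal, self-contained sketch of the two routes named under 1): looking vectors up in a hand-built table, and doing the same with `nn.Embedding`. The `<unk>`/`<pad>` entries, the sorted index order, `embedding_dim = 3`, the `sample` sentence, and the variable names `embedding_table`, `embedding_layer`, `manual_vectors`, and `layer_vectors` are all assumptions made for illustration.

```python
import torch
import torch.nn as nn

train_data = 'I want to be a AI engineer'

# Build the vocabulary; sorting (an assumption) makes the indices reproducible.
word_set = sorted(set(train_data.split()))

# Hypothetical convention: index 0 for unknown words, index 1 for padding.
vocab = {'<unk>': 0, '<pad>': 1}
for word in word_set:
    vocab[word] = len(vocab)

embedding_dim = 3  # assumed size, kept small for readability

# (a) Without nn.Embedding: a plain tensor used as a lookup table,
#     one row per vocabulary entry.
torch.manual_seed(0)
embedding_table = torch.randn(len(vocab), embedding_dim)

# Map a sentence to indices; words not in vocab fall back to <unk>.
sample = 'I want to be a data engineer'.split()
indices = torch.tensor([vocab.get(w, vocab['<unk>']) for w in sample])

# Indexing the table with an index tensor returns one row per word.
manual_vectors = embedding_table[indices]

# (b) With nn.Embedding: the layer owns a trainable weight matrix of the
#     same shape and performs the same row lookup.
embedding_layer = nn.Embedding(
    num_embeddings=len(vocab),
    embedding_dim=embedding_dim,
    padding_idx=vocab['<pad>'],  # this row is initialized to zeros
)
layer_vectors = embedding_layer(indices)

print(manual_vectors.shape)  # one vector of size embedding_dim per word
print(layer_vectors.shape)
```

Both routes produce a tensor with one row per input word. The practical difference is that the weights of `nn.Embedding` are registered as parameters and updated during training, whereas the hand-built table here is a fixed tensor unless gradients are enabled on it explicitly.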